How to Use Google Search Console, A Guide For 2021 (New Features Included)

- December 1, 2020

Google Search Console is often neglected in favour of its more glamorous and well-known cousin, Google Analytics. While Analytics is of course essential for top-level reporting, Search Console provides a raft of granular data not available in Analytics.

Formerly named ‘Google Webmaster Tools’, Search Console has gradually improved over the last few years, adding several features that make SEOs’ lives a little bit easier (or a little bit more difficult, depending on how you look at it!).

With 2021 approaching and a few new features added very recently, I thought it’d be a good time to revisit Search Console, outline the most important features and explain the new ones for anyone new to the platform. Here goes!

Performance

Search Results

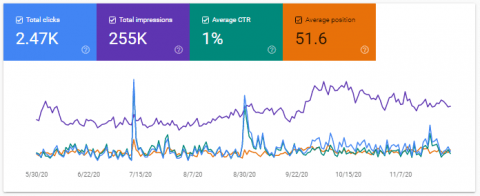

Here you’ll be able to see how many clicks and impressions your site received, as well as the average position and CTR for whichever time period you select, up to a maximum of 16 months for Google web results.

I would recommend checking this report on a weekly basis at the very least; the more often you check it, the more quickly you’ll spot any issues or potential issues.

For example, if your clicks drop below their ‘normal’ rate for the time of year, you can start investigating: was the drop in clicks down to a drop in impressions, CTR, average position, or a combination of the three?
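
Because clicks are simply impressions multiplied by CTR, you can put a rough number on how much of a change came from each. The Python sketch below is a minimal illustration of that arithmetic, assuming you have noted total clicks and impressions for two date ranges from the Performance report; `Period` and `explain_click_change` are hypothetical helpers, not part of Search Console. Average position isn’t a separate term here: a change in position will usually show up as a change in CTR (and often impressions).

```python
from dataclasses import dataclass


@dataclass
class Period:
    """Totals for one date range, as read from the Performance report."""
    clicks: int
    impressions: int

    @property
    def ctr(self) -> float:
        # CTR is clicks divided by impressions.
        return self.clicks / self.impressions if self.impressions else 0.0


def explain_click_change(before: Period, after: Period) -> dict:
    """Split the change in clicks into an impressions effect and a CTR effect.

    Since clicks = impressions * CTR, the two effects add up to the total
    change in clicks (before rounding).
    """
    impressions_effect = (after.impressions - before.impressions) * before.ctr
    ctr_effect = (after.ctr - before.ctr) * after.impressions
    return {
        "total_change": after.clicks - before.clicks,
        "from_impressions": round(impressions_effect, 1),
        "from_ctr": round(ctr_effect, 1),
    }


# Hypothetical figures: impressions fell by 20% while CTR held steady.
print(explain_click_change(Period(1000, 50000), Period(800, 40000)))
# {'total_change': -200, 'from_impressions': -200.0, 'from_ctr': 0.0}
```

Attributing impressions first and CTR second is one of two equally valid orderings; the effects always sum to the total change, but their individual sizes shift slightly depending on the order chosen.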

How do you know what your ‘normal’ level of clicks is?

Looking at your clicks over the past 6 months should give you a good idea of whether your site experiences relatively stable traffic year-round. If it doesn’t, comparing year over year can give you a good idea of the traffic levels you should be expecting. Of course, major events like a global pandemic could change this for better or worse.
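
If you export weekly click totals, a quick comparison like this makes the ‘normal’ level explicit. A minimal Python sketch, assuming a list of weekly clicks ordered oldest first; using the median of the previous 26 weeks (roughly 6 months) as the baseline is an assumption made for this sketch, chosen because one unusually good or bad week barely moves a median. The function names are hypothetical.

```python
from __future__ import annotations

from statistics import median


def pct_change(current: float, baseline: float) -> float:
    """Percentage change from baseline to current."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100


def compare_to_normal(weekly_clicks: list[int], same_week_last_year: int | None = None) -> dict:
    """Compare the most recent week's clicks with 'normal' levels.

    weekly_clicks is oldest first; the last value is the week being checked.
    'Normal' is the median of up to 26 preceding weeks, plus an optional
    comparison with the same week a year earlier.
    """
    *history, latest = weekly_clicks
    if not history:
        raise ValueError("need at least one earlier week to compare against")
    result = {"vs_recent_median_pct": round(pct_change(latest, median(history[-26:])), 1)}
    if same_week_last_year is not None:
        result["vs_last_year_pct"] = round(pct_change(latest, same_week_last_year), 1)
    return result


# Hypothetical data: a steady 1,000 clicks a week for six months, then 800.
print(compare_to_normal([1000] * 26 + [800], same_week_last_year=850))
# {'vs_recent_median_pct': -20.0, 'vs_last_year_pct': -5.9}
```

Here the drop looks dramatic against the last six months but modest year over year, which is exactly the seasonal pattern a YoY comparison is meant to reveal. What counts as a worrying drop is a judgement call for your own site.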

In the Performance report, there are numerous filtering options that allow you to get much more granular. For example, you can see how an individual query, page, country or device performs for your site over time.

These filtering options are incredibly useful for diagnosing more complex issues that may be related to a particular page or set of pages, or a particular query.
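
The same kind of filtering can be repeated on exported data, for example to see how a whole section of the site is performing. A minimal Python sketch, assuming rows reduced to (query, page, clicks, impressions); the URLs and figures are placeholders, and `summarise_section` is a hypothetical helper. Bear in mind that totals built from query-level rows may come out lower than the report’s headline figures, because Google leaves some anonymised queries out of query-level data.

```python
# Hypothetical rows reduced to (query, page, clicks, impressions), e.g. from
# a Performance report export. All URLs and numbers are placeholders.
ROWS = [
    ("blue widgets", "https://example.com/widgets/blue", 120, 3000),
    ("red widgets", "https://example.com/widgets/red", 80, 4000),
    ("widget repair", "https://example.com/blog/repair", 30, 1500),
]


def summarise_section(rows, page_prefix: str) -> dict:
    """Total clicks and impressions for pages whose URL starts with page_prefix.

    CTR is recomputed from the totals (clicks / impressions) rather than by
    averaging each row's CTR, so high-impression rows carry more weight.
    """
    clicks = impressions = 0
    for _query, page, row_clicks, row_impressions in rows:
        if page.startswith(page_prefix):
            clicks += row_clicks
            impressions += row_impressions
    ctr = clicks / impressions if impressions else 0.0
    return {"clicks": clicks, "impressions": impressions, "ctr": round(ctr, 4)}


print(summarise_section(ROWS, "https://example.com/widgets/"))
# {'clicks': 200, 'impressions': 7000, 'ctr': 0.0286}
```

Averaging the two widget pages’ individual CTRs (4% and 2%) would suggest 3%, noticeably higher than the 2.86% the section actually achieved.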

Query data is what really sets Search Console apart from Analytics. Of course, you can’t track conversions in Search Console, so it’s not a replacement for the ‘not provided’ keyword data, but it’s better than relying on Analytics alone and having potential ‘blind spots’.

Search Console can be integrated with Analytics so that your query data is pulled through into your Analytics reports.

Discover

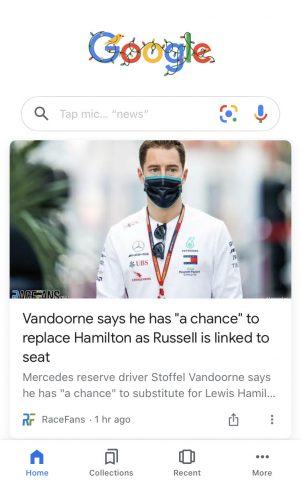

A frequently overlooked area of Search Console is the ‘Discover’ sub-section of ‘Performance’. Google Discover is part of the Google Search app, i.e. the links that appear underneath the search bar.

These links are personalised for each user based on their search history. Site owners may have less control over how much traffic they get from the Discover feed, but Google do offer some advice on how to make your content more likely to appear.

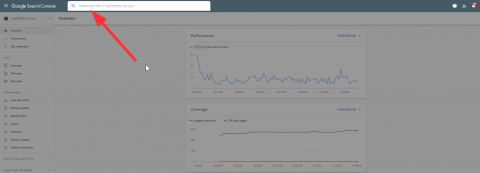

URL inspection

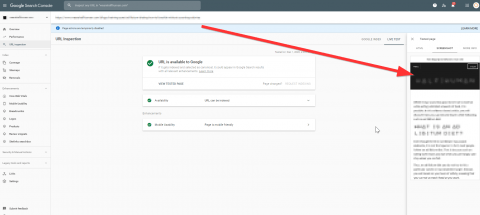

This tool can be used to diagnose individual URLs that may be presenting crawling, indexing, rendering or ranking issues.

Simply paste a URL into the top search bar and hit enter.

From here you can get several useful pieces of data about the URL in question, including whether the page is indexed, whether it’s included in the XML sitemap, whether it’s mobile friendly and whether it contains any structured data (and whether that structured data is valid).

Perhaps most usefully, you can view the HTML and a screenshot of the live page.

This will help you diagnose any issues. For example, is the page indexed but not ranking as well as you believe it should against the competition? Test the live URL and check whether any resources can’t be loaded, as these may be impacting Google’s ability to understand the page.

N.B. You can also test URLs using the Mobile-Friendly Test, which can be used independently of Google Search Console.

Index

Coverage

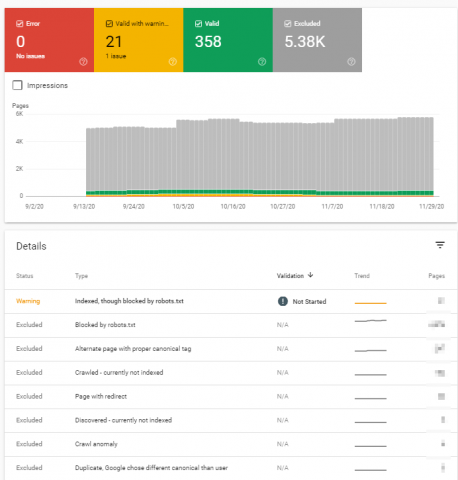

An often overlooked but crucial data source in Search Console is the ‘Coverage Report’.

This essentially tells webmasters how many of their URLs are eligible to appear in Google search results. In this report, URLs are split into four different categories:

Valid: these are URLs that are indexed in Google. Search Console will tell you whether they are currently included in the XML sitemap or not. Any URLs that you want to be indexed should be in the XML sitemap.

Valid with Warnings: these are URLs that are indexed but blocked by robots.txt. If you have any URLs in this category, they should be audited to check whether you actually want them in the index. If you do, the block should be removed. If not, the block should still be removed and a noindex tag (or canonical, in some cases) should be added, because Google can’t see a noindex tag on a page it isn’t allowed to crawl.

Error: a URL might be classed as having an ‘error’ for any number of reasons, e.g. it may be blocked, noindexed, a soft 404, or returning something other than a 200 status code (aside from redirects, which are covered under ‘Excluded’).

Excluded: these are URLs that Google has discovered but excluded from the index. The reasons for exclusion can be numerous, but some of the most common examples are redirected URLs, canonicalised URLs, or URLs that Google has excluded for an unspecified reason; this could be because some of the resources required to render the page are blocked, for example.
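
When auditing the ‘Valid with Warnings’ list, a quick first pass is to confirm which of those URLs your robots.txt really blocks, since Google can’t see a noindex tag on a URL it isn’t allowed to crawl. A minimal Python sketch using the standard library’s `urllib.robotparser`; the robots.txt rules and URLs are hypothetical placeholders. Python’s parser is not identical to Google’s (for example, it doesn’t understand the `*` and `$` wildcards Google supports, and it resolves conflicting rules differently), so treat the output as a starting point and confirm individual URLs with URL Inspection.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt and URLs copied from the 'Valid with warnings'
# list. Placeholders only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /checkout/
"""

FLAGGED_URLS = [
    "https://example.com/search/?q=widgets",
    "https://example.com/checkout/basket",
    "https://example.com/widgets/blue",
]


def blocked_for_googlebot(robots_txt: str, urls: list[str]) -> list[str]:
    """Return the URLs that the given robots.txt stops Googlebot from crawling.

    These URLs need the block lifting before a noindex tag on them can be
    seen by Google.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch("Googlebot", url)]


for url in blocked_for_googlebot(ROBOTS_TXT, FLAGGED_URLS):
    print("blocked:", url)
```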

For more information on individual URLs, use the Mobile-Friendly Test Tool, or URL Inspection in Search Console (see above).

Sitemaps

Possibly the most important part of Search Console for anyone setting up a new site is the Sitemaps module. Here, you can submit the URL of your sitemap, which is arguably the quickest way to get your URLs crawled and indexed, particularly for brand new sites.
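
Many CMSs and SEO plugins will generate a sitemap for you, but if you need to build one yourself, the format is simple. A minimal Python sketch that writes a bare-bones sitemap following the sitemaps.org protocol (a `urlset` containing one `url`/`loc` pair per page); the URLs and the `build_sitemap` name are hypothetical. Optional fields such as `lastmod` are left out, and a single sitemap file is limited to 50,000 URLs and 50MB uncompressed.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal XML sitemap listing each URL in a <loc> element.

    ElementTree escapes characters such as '&' in URLs automatically, which
    the sitemap protocol requires.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap(["https://example.com/", "https://example.com/widgets/blue"]))
```

Once the file is live on your site, its URL is what you submit in the Sitemaps module.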

You can also monitor how many of the URLs in each sitemap have been discovered, and then click on each one to see the coverage split (for more on coverage, see above).

Removals

If you have a URL indexed that you want removing quickly, you can submit removal requests here. In my experience, doing this will remove a URL from Google within a few hours.

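N.B. A removal request expires after around 6 months, so this is not a permanent fix. For a more permanent solution, add a noindex tag to the URL (and make sure it isn’t blocked in robots.txt, otherwise Google can’t crawl the page to see the tag), or remove the page so that it returns a 404 or 410 status code.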
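
Before a temporary removal lapses, it’s worth confirming that a noindex is actually being served. A minimal Python sketch, assuming you already have the page’s HTML and response headers (fetching them is left out); `RobotsMetaParser` and `has_noindex` are hypothetical names. It looks for `noindex`, or `none` (which Google treats as noindex, nofollow), in `robots` or `googlebot` meta tags and in the `X-Robots-Tag` HTTP header.

```python
from __future__ import annotations

from html.parser import HTMLParser

NOINDEX_DIRECTIVES = {"noindex", "none"}  # 'none' means noindex, nofollow


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            content = attributes.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str, headers: dict[str, str] | None = None) -> bool:
    """True if a robots meta tag or an X-Robots-Tag header carries noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    if NOINDEX_DIRECTIVES & set(parser.directives):
        return True
    for name, value in (headers or {}).items():
        if name.lower() != "x-robots-tag":
            continue
        # Values may be prefixed with a user agent, e.g. "googlebot: noindex".
        tokens = {part.split(":")[-1].strip().lower() for part in value.split(",")}
        if NOINDEX_DIRECTIVES & tokens:
            return True
    return False


print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))  # True
```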

Enhancements

Core Web Vitals

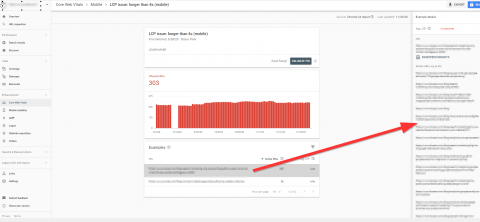

A relatively recent addition to Search Console, the Core Web Vitals report is split into Mobile and Desktop and should be your first port of call for diagnosing Core Web Vitals issues.

Why?

Because Google aggregates groups of URLs that have similar issues.

Even on a site with only 100 URLs, auditing each one for CWV issues would be a laborious and time-consuming task.

The GSC CWV report breaks URLs down by an overall rating: ‘Good’, ‘Needs improvement’ and ‘Poor’. Once you drill down by rating, you can then investigate which particular CWV is the problem: LCP (Largest Contentful Paint), FID (First Input Delay) or CLS (Cumulative Layout Shift).
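
To make the ratings concrete, here is a minimal Python sketch of the classification, using the thresholds Google published in 2020 and the rule, as Search Console describes it, that a URL takes the status of its worst-performing metric. Search Console applies these thresholds to field data (the 75th percentile of real-user visits), so the example values stand in for those percentiles; the function names are hypothetical.

```python
from __future__ import annotations

# Core Web Vitals thresholds as published by Google in 2020:
# (good_up_to, poor_above). Anything in between is 'Needs improvement'.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "FID": (100, 300),   # First Input Delay, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}
SEVERITY = {"Good": 0, "Needs improvement": 1, "Poor": 2}


def rate(metric: str, value: float) -> str:
    """Rate a single metric value against its thresholds."""
    good_up_to, poor_above = THRESHOLDS[metric]
    if value <= good_up_to:
        return "Good"
    if value > poor_above:
        return "Poor"
    return "Needs improvement"


def overall_status(values: dict[str, float]) -> str:
    """Overall status is the worst rating among the metrics supplied."""
    return max((rate(m, v) for m, v in values.items()), key=SEVERITY.__getitem__)


# Hypothetical 75th-percentile values for one URL group.
print(overall_status({"LCP": 2.1, "FID": 40, "CLS": 0.18}))  # Needs improvement
```

A group with fast LCP and FID can still land in ‘Needs improvement’ because of CLS alone, which is why drilling into the individual metric matters.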

You can then look at groups of similar URLs that Google believes to have the same (or similar) issues.

Once you know this, you can do a more detailed analysis of CWV performance using PageSpeed Insights, Lighthouse, or a 3rd party tool such as GTmetrix.
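
If you have a lot of URL groups to work through, PageSpeed Insights can also be queried programmatically through its public API. A minimal Python sketch that builds a request for a single URL, assuming the v5 `runPagespeed` endpoint with its `url`, `strategy` and optional `key` parameters; the helper names are hypothetical, and an API key is advisable for anything beyond occasional use. The JSON that comes back contains the Lighthouse lab results and, where Google has enough real-user data for the page, a `loadingExperience` section with field data.

```python
from __future__ import annotations

import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile", api_key: str | None = None) -> str:
    """Build a PageSpeed Insights API v5 request URL for one page."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    params = {"url": page_url, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


def run_psi(page_url: str, strategy: str = "mobile") -> dict:
    """Fetch and decode the JSON report (this makes a network request)."""
    with urlopen(psi_request_url(page_url, strategy), timeout=120) as response:
        return json.load(response)


print(psi_request_url("https://example.com/widgets/blue"))
```

Keeping the URL-building separate from the network call makes it easy to check a request before spending any API quota.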

Mobile Usability

Just because Core Web Vitals are new and shiny doesn’t mean you should neglect other important usability factors. Mobile friendliness is one of the ‘Page Experience’ signals in the algorithm, and is thus arguably as important as your Core Web Vitals stats.

The Mobile Usability report breaks URLs down into either ‘Valid’ or ‘Error’ categories.

Errors may fall into a few different categories; some common ones are ‘text too small to read’ and ‘clickable elements too close together’. Since most sites are now designed with mobile-first in mind, these types of errors should become less common as time goes on, but it’s always worth keeping an eye on this report.

AMP

If you use AMP pages on your site, this report will tell you if there are any errors with those pages specifically.

As with many other reports, URLs are split into ‘Error’, ‘Valid with Warnings’ and ‘Valid’. If you have pages with errors, this is likely to impact the visibility of those AMP pages, so ensure the implementation and markup are correct.

Links

If you don’t have access to any 3rd party link analysis tools, the ‘Links’ section in Google Search Console may prove useful.

Here you can discover the total number of external links you have, the top anchor texts of these external links and how many times each one is used, and the total number of internal links each page has.

The data is certainly rich enough to act upon. For example, you may well spot that an important page on the site has very few internal links pointing to it; you could then work to increase the number of internal links to that page.

The amount of insight on offer here, however, pales into insignificance when compared to a ‘full fat’ backlink analysis tool like Ahrefs. A tool like this will offer much more useful data; for example, you’ll be able to aggregate all the links pointing at your site from a particular domain, get an idea of how powerful (valuable) each link is, and view all links pointing to your site with the same anchor text.

If you do have the budget for Ahrefs, I’d highly recommend it. Google falls a little short when it comes to reporting links (though, of course, it isn’t really their remit to do so).

Crawl Stats

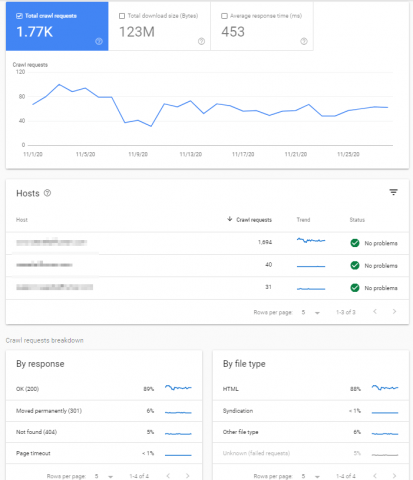

The Crawl Stats report is brand new as of November 2020, but oddly hidden away within the ‘Settings’ module of Search Console.

This is a huge improvement on the legacy Webmaster Tools ‘Crawl Stats’ report which only provided very topline metrics on how many pages were being crawled per day.

The new report does this, as well as breaking down the data by file type, response code, bot type (only Google’s bots, obviously), and whether the URLs were newly discovered or already-known URLs being re-crawled.

Why is this more useful?

There are many reasons, but the main one is that the richer data provides more opportunity for analysis. For example, it’s all well and good crawling your site and discovering you only have a couple of 404 errors, but if Google is regularly crawling those 404 URLs, this could be a problem, as that crawl activity could be better spent on pages you want indexed.
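
If you also have access to your server’s raw access logs, you can run a similar check yourself. A minimal Python sketch, assuming logs in the common Apache/Nginx ‘combined’ format; the regex, sample lines and function name are assumptions for illustration. Anyone can claim to be Googlebot in a user-agent string, and Google recommends verifying genuine Googlebot traffic with a reverse DNS lookup, which this sketch doesn’t do.

```python
import re
from collections import Counter

# Combined Log Format: ip ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_404s(lines) -> Counter:
    """Count how often each 404 URL was requested by a 'Googlebot' user agent."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and match["status"] == "404" and "Googlebot" in match["agent"]:
            hits[match["path"]] += 1
    return hits


# Hypothetical log lines.
GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE = [
    f'66.249.66.1 - - [01/Dec/2020:10:00:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [01/Dec/2020:11:00:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [01/Dec/2020:12:00:00 +0000] "GET /gone HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    '203.0.113.5 - - [01/Dec/2020:12:30:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "Mozilla/5.0"',
    f'66.249.66.1 - - [01/Dec/2020:13:00:00 +0000] "GET / HTTP/1.1" 200 2048 "-" "{GOOGLEBOT}"',
]

print(googlebot_404s(SAMPLE).most_common())  # [('/old-page', 2), ('/gone', 1)]
```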

Will this be a substitute for log files?

Probably not. There are still limitations, mainly that, as mentioned, this is only Google crawl data; your site will be crawled by many other bots besides Google’s. It’s also currently unclear how far back this data goes. It may only be 3 months, and if that’s the case, comparing crawl data YoY to diagnose problems won’t be an option.

Summary

Google Search Console should be your one-stop shop for diagnosing your site’s performance. There are, of course, other excellent 3rd party tools that offer much broader data when it comes to rankings and links, but you should certainly treat Search Console as your primary source of data, given that most of us are trying to rank in Google’s search engine.

The post How to Use Google Search Console, A Guide For 2021 (New Features Included) appeared first on Koozai.com
